Live Programming

Live programming is commonly understood as the act of programming systems while they are already executing code. There are many communities, researchers, and ideas associated with the term, but they are not unified by it. These are my notes on live programming; they will slowly turn into an essay.

TODO Status

I’m still trying to find a way to elegantly put together my readings on live programming, so this is a work in progress.

Literature

This is not an extensive overview of live programming literature, but a sort of guided tour of ideas through papers.

Tanimoto cite:tanimoto_viva:_1990, and cite:tanimoto_perspective_2013

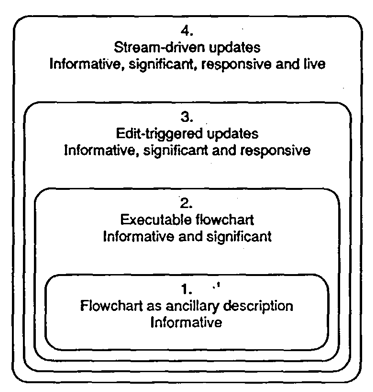

In VIVA: A Visual Language for Image Processing cite:tanimoto_viva:_1990, Tanimoto introduces a classification of “liveness” for visual languages. It has often been used as a measure of the liveness of programming environments, and has been adapted for non-visual languages and other domains like music cite:church_liveness_nodate.

cite:tanimoto_viva:_1990


- Level 1 liveness originally referred to having some kind of visual document that expressed, but did not define, the behaviour of the system under discussion, e.g. notes/diagrams in a notebook, or a printed-out flowchart expressing business logic.

- Level 2 liveness refers to a representation of a system that can be executed. I think originally the idea was dataflow programs, but people use this to talk about normal source code also. The liveness is in the edit/compile/run cycle where the programmer has to make changes, then trigger the system and wait.

- Level 3 liveness is the property of a system that reacts quickly to edits, but still needs to be triggered.

- Level 4 liveness is level 3 liveness, but “online”. That is, instead of waiting for an edit to be signalled, the system will react at the earliest opportunity to source changes.

In A Perspective on the Evolution of Live Programming cite:tanimoto_perspective_2013, Tanimoto presents a little overview of live programming to date and extends liveness with two more levels. I haven’t seen these levels referenced much, but they describe a system that is more active and may anticipate future changes to the system. For example, the system might see you are creating a while loop and suggest boilerplate for you.

Evidently people find this idea valuable because it is used all the time in live programming literature, but it has received critique and extensions to keep it up to date. Notable examples are the liveness diagrams in cite:church_liveness_nodate and cite:sorensen_systems_2017, which are feedback loops in the style of systems dynamics, and the numerous clarifications of Tanimoto’s intent, as in cite:campusano_live_2017.

The actual content of the original paper, VIVA, receives little attention, but fits in with some common themes of live programming: education, and dataflow languages.

TODO Hancock cite:hancock_real-time_2003

Real-Time Programming and the Big Ideas of Computational Literacy is Hancock’s PhD thesis on developing two software environments for teaching programming to children. It is commonly cited for Hancock’s definition and discussion of the “steady frame”. Comparing hitting a target with a bow and arrow in archery to hitting the same target with a water hose, Hancock defines the steady frame as:

A way of organizing and representing a system or activity, such that

- relevant variables can be seen and/or manipulated at specific locations within the scene (the framing part), and

- these variables are defined and presented so as to be constantly present and constantly meaningful (the steady part).

In the example, the relevant variable is aim, so the water hose provides a steady frame where the bow and arrow do not.

The steady frame is then one of the common ideas used to explain the value of continuous feedback that live programming delivers, and to make distinctions between continuous feedback that is useful and feedback that is not.

Some of the things I found interesting in this thesis:

- The parallel universe problem is the problem of having great tools that only exist in their own world that can’t really interact with the outside world. To take advantage of the tools you have to “move in” to the parallel universe. Smalltalk is often given as an example of a computing environment with liveness built-in, and due to its all-encompassing nature I think it would qualify for the parallel universe problem. Many many many live programming tools exist only as prototypes or embedded in some inaccessible world, so this is a real problem.

- Variables are discussed in the context of real-time and live programming. Hancock highlights particularly how children selected and used variables in their programs. Particularly, appropriate variables seemed to serve the steady frame of the program, but also improve the abstractions used in the programs to make them concise and easier to understand.

- Steps vs processes was one of the big ideas in the thesis. Hancock found that in Flogo I (the visual dataflow language), users were having trouble expressing mixed stateful/continuous systems. For example, a robot driving to a point is continuous behaviour, and when it gets there it may need to swap (state) to a different continuous behaviour. Hancock then embedded these ideas into Flogo II (the text language), but found it hard to express the ideas in simple English that did not confuse children.

- Live programming as modelling: TODO
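As a rough illustration of the mixed stateful/continuous systems described under “Steps vs processes”, here is a minimal Python sketch of the robot example. It is not Flogo code: the names drive_towards and simulate, the one-dimensional position, and the "driving"/"waiting" states are all assumptions made for illustration.

```python
def drive_towards(position, target, speed=1.0):
    """Continuous behaviour: move one tick closer to the target without overshooting."""
    step = max(-speed, min(speed, target - position))
    return position + step


def simulate(target, ticks):
    """Run the robot for a number of ticks and record (state, position) per tick."""
    position = 0.0
    state = "driving"  # hypothetical state names, not taken from the thesis
    trace = []
    for _ in range(ticks):
        if state == "driving":
            position = drive_towards(position, target)
            if position == target:
                # The discrete step: swap to a different continuous behaviour.
                state = "waiting"
        # In the "waiting" state the robot simply holds its position.
        trace.append((state, position))
    return trace


trace = simulate(target=3.0, ticks=5)
```

The continuous part (drive_towards) runs on every tick, while the state change happens once. Keeping the two readable side by side is the difficulty the thesis describes.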

Sean McDirmid on Hacker News:

Flogo 2 and Hancock’s dissertation are my goto for live programming origin work. – https://news.ycombinator.com/item?id=16100842

and again here https://news.ycombinator.com/item?id=7761705.

TODO Collins et al. cite:collins_live_2003

Live coding in laptop performance is equal parts manifesto and technical discussion. The authors don’t like pre-configured graphical interfaces and prefer the excitement of developing their own systems. What for? For live coding: an artistic performance, in this case music, created by devising algorithms on the fly. The aim is to push the boundaries and to truly use the programming language as an instrument.

Live coding is often a source for bleeding edge ideas in live programming. Sorensen and Gardner cite:sorensen_systems_2017 highlight the real-time aspect of live audio production. At a sample rate of 44.1 kHz, and with a fixed buffer size, there is only so much time to perform all the computation required for a large signal chain with many instruments and DSP operations. So live coders have taken to developing systems that meet these real-time requirements and provide live programming capabilities. That makes for interesting research.
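To make the time budget concrete, the deadline for filling one audio buffer is the buffer length divided by the sample rate. The sketch below works this through in Python; the 512-frame buffer is an assumed, illustrative size rather than a figure from Sorensen and Gardner.

```python
SAMPLE_RATE_HZ = 44_100  # sample rate mentioned in the text


def buffer_deadline_ms(buffer_frames, sample_rate_hz=SAMPLE_RATE_HZ):
    """Milliseconds available to compute one buffer before the audio device needs it."""
    return 1000.0 * buffer_frames / sample_rate_hz


deadline = buffer_deadline_ms(512)  # 512 frames is an assumed buffer size
print(f"{deadline:.2f} ms per buffer")  # 11.61 ms per buffer
```

Everything in the signal chain, plus any code the performer has just redefined, has to finish inside that window or the audio glitches.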

This attitude towards live programming is different from what you see from people like McDirmid, Edwards, and Victor. There is less focus on education (excepting Sonic Pi), and on ideas that don’t make sense in the domain of music. There is some work that looks at features like reified computation, time travel, and rotoscoping (TODO insert citations), which don’t make sense in music and other real-time fields. That’s not to say live coders aren’t interested in making user friendly systems, but live algorithmic music is quite a specific goal.

The Extempore programming language cite:noauthor_extempore_nodate was born out of live coding and is trying to push into new domains. It is a live coding environment, but it has also been used as a tool for scientific simulations and interactive multimedia experiences cite:sorensen_systems_2017. Sorensen talks about creating a “full-stack” experience by designing XTLang as a systems programming language that can offer efficient code, speak to C libraries at the ABI level, and “cannibalise” software live all the way down the stack, introducing live programming aspects to existing libraries.

TODO:

- Show us your screens

- cite:magnusson_herding_2014

- cite:brown_aa-cell_2007

TODO McDirmid cite:mcdirmid_living_2007

What is Live Programming?

The term “Live Programming” is overloaded. In both practice and academia it has been used to describe many kinds of programming activities which provide the programmer with some form of live feedback. This is so general that it includes tools like autocomplete, and you could probably argue that typing into a text editor is also covered. To get a sense of the common uses of “live programming”, it’s helpful to look at some communities, research, and software.

Hancock

In Real-Time Programming and the Big Ideas of Computational Literacy, Hancock is clear in his definition of “live”:

The code displays its own state as it runs. New pieces of code join into the computation as soon as they are added.

If displaying state and running code “as soon as” it is added are the what, then Hancock’s why is the steady frame. The steady frame is basically a way to say that it’s easier to understand systems or perform activities when you can see and manipulate relevant variables (framing), and that the variables are always defined and meaningful (steady).

Live Coding

Live coding is commo

Sean McDirmid’s work focuses on improving programmer experience, and his working definition of live programming seems to reflect that.

- mcdirmid, victor, edwards

- live coding, toplap, algorave

- clj, cljs, react, hot-reload, elm, flutter

- REPLs, common lisp

I use the terms “live coding” and “live programming” almost interchangeably, because to me the interesting aspect is the “liveness”. But I understand the desire to disambiguate, especially as someone who used to get upset when my Mum would call just about anything a “Game Boy” when it was really a Game Boy Color or a Game Boy Advance. So this is to be clear:

Live coding normally refers to a type of performance where an artist performs by programming music and/or visuals “live”, or “on-the-fly”, or “just-in-time”. The hallmark of live coding is the projection of the programmer’s screen for the audience to see. You can get a sense of this in this video of Andrew Sorensen, which includes live commentary.

Live programming is the more general term: the act of programming systems while they are executing code. There is a little nuance to this idea though.

Liveness by example

Imagine you are writing a program that draws a red circle on the screen:

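    from time import sleep

    # setFill and ellipse are assumed to come from a Processing-style drawing library.
    def draw_circle(x, y):
        setFill('red')
        diameter = 100
        ellipse(x, y, diameter, diameter)

    def main():
        while True:
            draw_circle(50, 100)
            sleep(0.0167)  # roughly 60FPS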

You decide you no longer want a red circle because blue is a better colour. The typical edit cycle would be to close the running program, edit the source code in a text editor, and run it again to see the result. We’re going to say that is not live programming.

Imagine we repurpose the system so that you can now send it new function definitions while it is running, and the next time that function gets called it will run the new code. What you can do now is leave the program running, and then send over your new definition of draw_circle which draws a blue circle instead of a red one. The next time we go around the main loop we’ll get the blue circle without needing to restart the program.
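One way to picture this is a main loop that accepts new definitions between passes. The following Python sketch is a toy under stated assumptions, not how any particular live programming system works: LiveProgram, send, and step are hypothetical names, and a list called drawn stands in for the screen so that no graphics library is needed.

```python
import queue

RED_CIRCLE = """
def draw_circle(x, y):
    drawn.append(('red', x, y))
"""

BLUE_CIRCLE = """
def draw_circle(x, y):
    drawn.append(('blue', x, y))
"""


class LiveProgram:
    """A main loop whose functions can be redefined while it runs."""

    def __init__(self, source):
        # 'drawn' stands in for the screen so the sketch needs no graphics library.
        self.namespace = {"drawn": []}
        self.pending = queue.Queue()  # definitions sent by the programmer
        exec(source, self.namespace)

    def send(self, source):
        """Queue new definitions; queue.Queue makes this safe to call from another thread."""
        self.pending.put(source)

    def step(self):
        """Run one pass of the main loop."""
        while not self.pending.empty():
            exec(self.pending.get(), self.namespace)
        # Looked up by name on every pass, so the newest definition is used.
        self.namespace["draw_circle"](50, 100)


program = LiveProgram(RED_CIRCLE)
program.step()             # draws red
program.send(BLUE_CIRCLE)  # sent while the program is still "running"
program.step()             # next pass draws blue
print(program.namespace["drawn"])
# [('red', 50, 100), ('blue', 50, 100)]
```

The important detail is that draw_circle is looked up by name on every pass. If the loop held on to the original function object, the new definition would never be called.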

Liveness is basically the degree of interactivity and immediacy offered by a system. We can see that the scenario where you send your new definition over and see it in use immediately is more live than the typical edit/run cycle.

In Tanimoto’s classification, our original red circle program would be level 2, whereas the updated program would be level 3.

You can probably imagine what our circle drawing program would be like at level 4 liveness. When we edit the source code to say 'blue', we would see the change immediately take effect.
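A level 4 flavour of the same idea can be sketched by having the system watch the source itself instead of waiting to be told about an edit. The Python below is a minimal sketch that assumes the source lives in a file and is polled for changes; WatchedSource and poll are hypothetical names, and a real environment would more likely hook into the editor or use file-system notifications.

```python
import os
import tempfile


class WatchedSource:
    """Re-evaluates a source file whenever its text changes."""

    def __init__(self, path):
        self.path = path
        self.last_text = None
        self.namespace = {}

    def poll(self):
        """Reload the file if its text changed; return True when new code was loaded."""
        with open(self.path) as f:
            text = f.read()
        if text == self.last_text:
            return False
        try:
            code = compile(text, self.path, "exec")
        except SyntaxError:
            # A half-typed edit: keep running the last good version.
            return False
        exec(code, self.namespace)
        self.last_text = text
        return True


def write(path, text):
    with open(path, "w") as f:
        f.write(text)


with tempfile.TemporaryDirectory() as tmp:
    path = os.path.join(tmp, "circle.py")
    write(path, "def draw_circle(x, y):\n    return ('red', x, y)\n")
    source = WatchedSource(path)
    source.poll()
    before = source.namespace["draw_circle"](50, 100)

    write(path, "def draw_circle(x, y):\n    return ('blue', x, y)\n")
    changed = source.poll()   # the edit is picked up without an explicit "send"
    after = source.namespace["draw_circle"](50, 100)

    write(path, "def draw_circle(x, y:\n")  # syntactically broken mid-edit state
    broken = source.poll()
    still = source.namespace["draw_circle"](50, 100)
```

Calling poll once per frame gives the “earliest opportunity” behaviour. The SyntaxError branch hints at a design problem that only appears at this level: the system sees every intermediate state of an edit, including the broken ones.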

What is Live Programming? (Redux)

Live programming is used to describe a few similar ideas all with live programmer feedback in common, but in different forms. By “live programmer feedback”, I mean feedback from the code or the running program as it is being edited.

To get a quick idea of live programming, imagine you are writing a program that draws a red circle on the screen:

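    from time import sleep

    # setFill and ellipse are assumed to come from a Processing-style drawing library.
    def draw_circle(x, y):
        setFill('red')
        diameter = 100
        ellipse(x, y, diameter, diameter)

    def main():
        while True:
            draw_circle(50, 100)
            sleep(0.0167)  # roughly 60FPS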

To change that circle from red to blue, you would need to edit the program and run it again. We can make this process faster by modifying the function draw_circle while the program is already running. Because the new version of the function will be called the next time we go through the main while loop, the circle will be redrawn as blue and you can see the effect of your change almost immediately. This is a basic form of live programming that has been available for decades with technology like REPLs, but there are more sophisticated forms of live programming like those found in the IDE Light Table.

2012 was undeniably a big year for live programming. Bret Victor’s Inventing on Principle and the followup, Learnable Programming, directly inspired Chris Granger’s IDE, called Light Table. The idea of live programming in Victor and Granger’s work was to bring programmers closer to their tools and program domains by visualising the execution of programs as they are being written. Light Table raised over US $300,000 on Kickstarter, showing there was wide interest in live programming.

We can use our circle drawing program for an example of execution visualisation. To understand how the program executes you need to run through the code in your head. You might imagine the function call draw_circle(50, 100) by substituting the arguments x=50, y=100 into the function body. You see that ellipse(50, 100, 100, 100) will be called. Why go through this process when we could ask the computer to do it for us? It might look something like this:

    def draw_circle(x=50, y=100):
        setFill('red')
        diameter = 100
        ellipse(x=50, y=100, diameter=100, diameter=100)

You no longer need to run the program in your head to see what values are being passed to ellipse. This type of visualisation is common in live programming environments. If we combine this technique with the live code update technique you can receive feedback from the program through code annotations and through the domain. In Inventing on Principle, Victor demos techniques like this.
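A small part of this annotation can be produced with Python’s standard inspect module, which can bind concrete arguments to parameter names. This is only a sketch of the labelling step, not how Light Table or Victor’s demos work: annotate_call is a hypothetical helper, and the ellipse stand-in with width and height parameters is an assumption.

```python
import inspect


def annotate_call(func, *args, **kwargs):
    """Render a call with each argument labelled by its parameter name."""
    bound = inspect.signature(func).bind(*args, **kwargs)
    bound.apply_defaults()
    labelled = ", ".join(f"{name}={value!r}" for name, value in bound.arguments.items())
    return f"{func.__name__}({labelled})"


def ellipse(x, y, width, height):
    """Stand-in for a drawing call; a real one would render to the screen."""


print(annotate_call(ellipse, 50, 100, 100, 100))
# ellipse(x=50, y=100, width=100, height=100)
```

A live environment would run something like this on every call as the program executes and display the result next to the source line, instead of printing it.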

Victor’s work had an impact on the academic world too. Sean McDirmid was “heavily inspired” by Victor to explore the same ideas in the YinYang language. In 2013’s Usable Live Programming, McDirmid defined live programming as “concurrently editing and debugging a program”, and he used the same idea: visualising program execution.

When Sean posted his paper to the programming languages website, Lambda the Ultimate (LtU), he included a brief history of live programming. This was criticised for ignoring developments in the field of live programming, particularly for not including languages used in audiovisual live coding.

Live coding is commonly used to describe an audiovisual performance where the programmer/artist writes code that produces music and/or visuals. One of the hallmarks of a musical live coding performance is the projection of code for the audience to see. There are many more kinds of performance programming that are also considered to be live coding, including live dance choreography with programs. Magnusson cite:magnusson_herding_2014 discusses this.

Live coding is then live in the domain sense, the domain being sound. It’s not unusual to see live coders working in bare bones text editors without much programmer tooling like auto-complete. The focus on liveness in the domain seems to be what separates live coding from the work of Bret Victor and Sean McDirmid, who are focussed on liveness in programmer feedback, e.g. through the code or visualisation of its execution. Given that, it’s understandable that McDirmid opted to leave live coding out of his history of live programming, but why the criticism on LtU?

For many, the terms “live coding” and “live programming” are interchangeable, and that seems completely reasonable. Both describe situations where the programmer is working on some code and the computer is responding as they are doing it. Not only is it responding, but it’s giving useful feedback for the programmer to continue work on the program. The goals of the programmers are different, producing a finished program vs performing music, and the difference in tools reflects that, but they come from the same ideas.

Live coding languages like Gibber, Extempore, Fluxus, and TidalCycles have been a source of technical innovation, HCI research, and generally some of the weirder ideas and systems you can find in computer science. Live coding is hard, and it’s necessary to develop systems like these to let programmers treat the computer like a musical instrument. To ignore the ideas of these systems is to ignore a history of novel methods for programmer interaction with live programs, and I think that is why McDirmid was criticised.

TODO State of Liveness

If live programming languages are better, why don’t we see them used more in the software industry? In Beating the Averages cite:graham_beating_2005, Paul Graham asserts that it’s easy to explain why a powerful programming language like Lisp isn’t more widely used. “The Blub Paradox” says that a programmer who uses Blub can’t see why anyone would use a less powerful language, but is unable to understand why someone would use a more powerful language because they don’t see it as such. Similarly, Graham points to the social difficulty of trying to adopt a different language in the workplace as one reason that big companies are slow to adopt more powerful technologies. It’s easy enough to see this argument applying to liveness as it does to programming languages in general, and even if it’s not true I think it’s fair to say that the history of popular programming languages has very little to do with the features that those languages offer. If it did, C wouldn’t have been TIOBE’s programming language of the year for 2017. (TODO cite TIOBE)

Many live programming papers motivate live programming by pointing at non-live languages, and particularly the edit, compile, run cycle. (TODO citations cite:church_liveness_nodate) Not being mainstream is not a good reason to motivate live programming when there are large, niche programming language communities that celebrate liveness and use it to good effect in industry. Much in the spirit of Beating the Averages, there are companies using languages like Smalltalk (Pharo) and Clojure that are commercially successful. At a technical level, live programming also exists in tooling for popular languages, like React/Redux with hot reloading for JavaScript, and code hot-swapping in Erlang [1]. So it’s not fair to say that live programming is interesting because it isn’t mainstream; there’s sufficient variety and interest from the software industry that it can stand on its own legs.

Another assumption I have seen (TODO cite that mcdirmid presentation) is that in creating a live programming environment, the designer necessarily has to solve a difficult technical problem, trading off levels of liveness, efficiency, consistency, side effects, and state to make a usable programming environment. This ignores the domain specific nature of much of programming, and turns what should be an engineering problem into a language design one. I don’t think all of these variables can be balanced in a way that makes sense for general purpose programming, and a better approach is to provide tools for programmers to bring liveness into their environments. There is evidence for this approach in Clojure, and in the live coding world we can see lots of domain specific liveness that doesn’t compromise usability.

The Promise of Live Programming cite:mcdirmid_promise_2016 is an overview of live programming and the design challenges it needs to overcome. The key challenge is usefulness:

The biggest challenge instead is live programming’s usefulness: how can its feedback significantly improve programmer performance?

The paper goes on to discuss ideas and implementations in the live programming literature like the steady frame, and live programming idea testbeds like Subtext (TODO cite). These weirder and somewhat esoteric programming systems are important because they continuously challenge and develop the ideas of live programming, but by looking elsewhere we can find different takes on liveness, particularly in application development, web development, and live coding communities.

TODO Clojure(script)

TODO: update this section in reference to the TouchDevelop paper, which has react/redux ideas before they were react/redux.

Clojure is a Lisp that targets the JVM. It is a dynamic programming language, and it’s typical to see developers using a REPL-based workflow. What’s interesting though is that most of the liveness in Clojure has been added by developers over time through libraries. As an example, Mount and Component are both libraries that were written to solve the mismatch between code and program state when working with a REPL. To keep the program live, the programmer can write functions that reset stateful resources, and call them all at once whenever they need the system back in a known state. This is a problem that has been addressed in live programming literature (TODO cite), TODO.
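The “reset everything at once” idea can be sketched in a few lines. The Python below is an illustration of the pattern only and does not reproduce the API of Mount or Component: System, register, and reset are hypothetical names, and the db and cache resources are made-up stand-ins.

```python
class System:
    """Registry of stateful resources that can be restarted together."""

    def __init__(self):
        self._components = []  # (name, start, stop) in start order
        self.running = {}

    def register(self, name, start, stop):
        self._components.append((name, start, stop))

    def start(self):
        for name, start, _ in self._components:
            self.running[name] = start()

    def stop(self):
        # Stop in reverse order so dependents shut down before their dependencies.
        for name, _, stop in reversed(self._components):
            if name in self.running:
                stop(self.running.pop(name))

    def reset(self):
        """Bring every resource back to a known, freshly started state."""
        self.stop()
        self.start()


log = []

def start_db():
    log.append("db up")
    return {"rows": []}

def stop_db(db):
    log.append("db down")

def start_cache():
    log.append("cache up")
    return {}

def stop_cache(cache):
    log.append("cache down")


system = System()
system.register("db", start_db, stop_db)
system.register("cache", start_cache, stop_cache)
system.start()
system.running["db"]["rows"].append("stale")  # state drifts during a REPL session
system.reset()                                # one call restores a known state
```

After the reset, system.running["db"] is a fresh resource with no stale rows, which is the property that keeps a long-running REPL session trustworthy.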

Another idea popular in ClojureScript is to manage state in a global immutable database that can only be modified through pure functions that are triggered by events. This is a version of the command pattern, in which the idea is to wrap operations up in data. This doesn’t seem particularly connected to liveness, but it helps to enable a live workflow by centralising state. It’s common to see ClojureScript developers have their browser and editor open side-by-side, evaluating code in the editor and quickly seeing the changes propagate to the browser. Keeping all state in one place is a simple way to make this workflow possible. The state container idea caught on big in the JavaScript world through the libraries Flux and Redux, and many developers now use it to enable a “hot reloading” workflow.
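To show why centralised state helps with hot reloading, here is a minimal Python sketch of a state container. It is written in the spirit of the pattern described above and is not the API of Redux or any ClojureScript library: Store, dispatch, replace_handler, and the two handler functions are hypothetical names.

```python
from types import MappingProxyType


class Store:
    """Single state container that is only changed by dispatching events."""

    def __init__(self, handler, initial_state):
        self.handler = handler
        self.state = MappingProxyType(dict(initial_state))  # read-only view

    def dispatch(self, event):
        # The handler is a pure function: (state, event) -> new state.
        self.state = MappingProxyType(dict(self.handler(self.state, event)))

    def replace_handler(self, handler):
        """Hot-swap the logic while keeping the current state."""
        self.handler = handler


def count_by_one(state, event):
    if event["type"] == "increment":
        return {**state, "count": state["count"] + 1}
    return state


def count_by_ten(state, event):
    if event["type"] == "increment":
        return {**state, "count": state["count"] + 10}
    return state


store = Store(count_by_one, {"count": 0})
store.dispatch({"type": "increment"})
store.replace_handler(count_by_ten)    # the "edit" arrives while the app is running
store.dispatch({"type": "increment"})
print(store.state["count"])  # 11
```

Because the handlers hold no state of their own, swapping one for another loses nothing: the count from before the edit is still there afterwards.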

TODO – declarative UIs, MVVM, and state

Notebooks

TODO

Live coding

TODO

Implementations

- REPLs (lisps, python, ruby, ghci, …)

- JS hot-reloading

- Video game engines (especially old Naughty Dog software) https://www.gamasutra.com/view/feature/131394/postmortem_naughty_dogs_jak_and_.php

- Clojure and the reloaded workflow http://thinkrelevance.com/blog/2013/06/04/clojure-workflow-reloaded

- Sonic Pi, Extempore, ChucK, Gibber, Tidal, PD, MAX/MSP, …

Themes of Live Programming

Music

Education & Visual Programming Languages

Many people believe that tightening the programming feedback loop makes programming more engaging and easier to understand, so it’s common to see research that says “typically in programming you have an edit/compile/run cycle, but live programming lets us remove this and make programming easier”.

- Campusano & Fabry cite:campusano_live_2017: “Typically, development of robot behavior entails writing the code, deploying it on a simulator or robot and running it in a test setting. … This process suffers from a long cognitive distance between the code and the resulting behavior, which slows down development and can make experimentation with different behaviors prohibitively expensive. In contrast, Live Programming tightens the feedback loop, minimizing the cognitive distance.”

- Resig cite:noauthor_john_nodate: “In an environment that is truly responsive you can completely change the model of how a student learns: rather than following the typical write -> compile -> guess it works -> run tests to see if it worked model you can now immediately see the result and intuit how underlying systems inherently work without ever following an explicit explanation. … When code is so interactive, and the actual process of interacting with code is in-and-of-itself a learning process, it becomes very important to put code front-and-center to the learning experience.”

A commonly cited thesis on live programming and visual languages is Hancock’s Real-Time Programming and the Big Ideas of Computational Literacy cite:hancock_real-time_2003. This is an early push to get live programming into educational systems, but I think with better principles than others I have seen. Bateson’s comparison of continuous feedback

Robotics

TODO Time

cite:mcdirmid_programming_2014

cite:aaron_temporal_2014

Bibliography

Please forgive the formatting for the moment; this is a work in progress.


Footnotes:

[1] Not to mention dlopen and dlsym.